Machine Learning: A Journey Towards General-Purpose Algorithms

BY BRANNON DORSEY

Something radical is happening right now with Artificial Intelligence. It appears that we may now be on the verge of an unprecedented paradigm shift in how we think about and employ computers. For decades, we’ve been writing programs in the form of line-by-line instructions. We are now beginning to move away from these limitations, instead teaching machines to learn on their own.

This shift is largely happening in a field known as Machine Learning, and here at Branger_Briz, we are excited to be a part of this radical moment in human discovery. Over the coming weeks and months, we will be reading research papers, experimenting with state-of-the-art algorithms and code implementations, and participating in shaping this new wave of Artificial Intelligence research through a lens of creative production. As excited as we are about the present and upcoming Machine Learning revolution, we also believe that the radical disruption it may bring has the potential to further a dangerously widening digital literacy divide. We intend to combat this divide by sharing the research and projects that we create in a way that is democratized and accessible. This blog post is an introduction to the current state of Machine Learning and our engagements with it. More posts on the implications of an AI overhaul will soon follow.

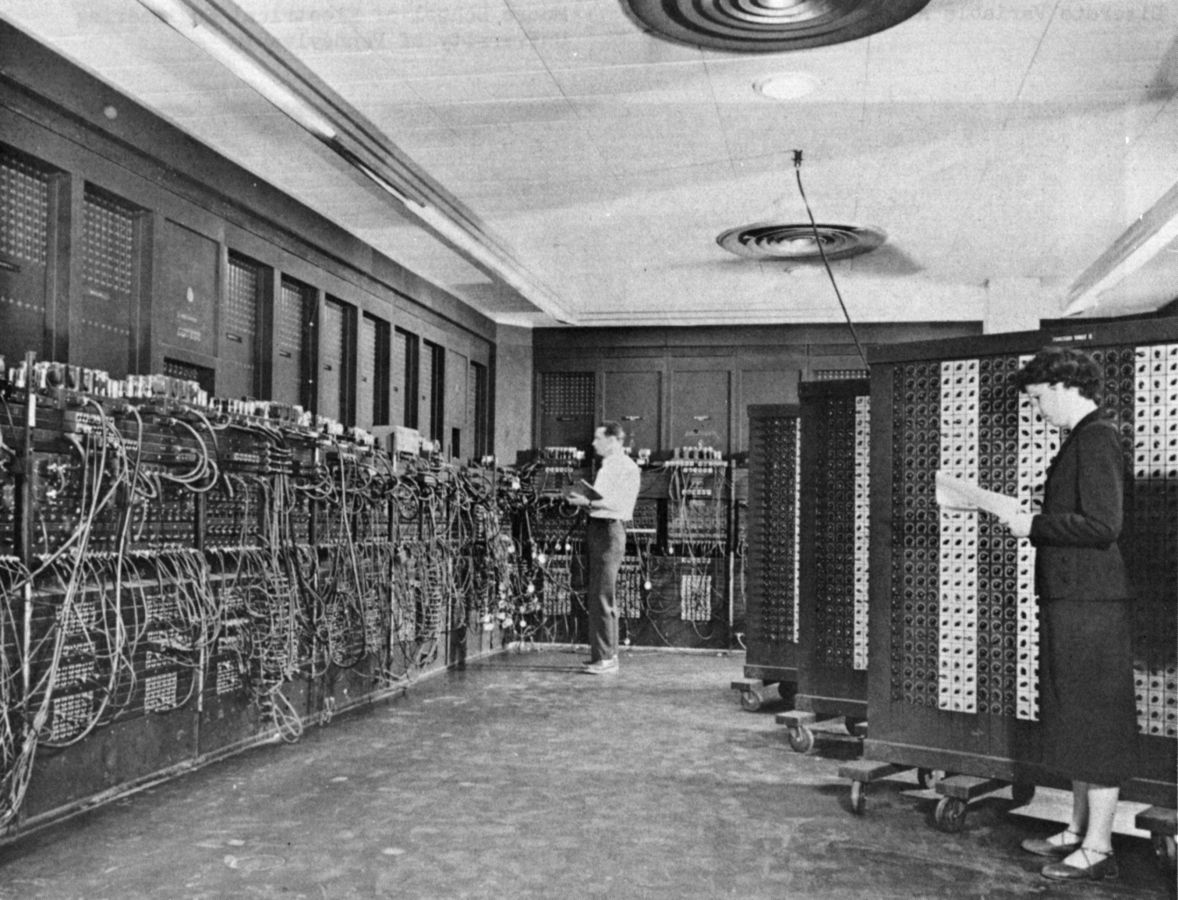

Recent advances in Machine Learning, specifically in the field of Deep Learning, have achieved human (and superhuman) performance at the most complex of tasks at an accelerating rate. Since the advent of the modern electronic computing machine in the mid-20th century, we’ve seen the growth and application of computers into nearly every domain of human life. In nearly 75 years, we’ve transformed the state-of-the-art electronic computer from a specialized institutional instrument into a general-purpose handheld device that over two billion of the world’s population carry on their person at all times. With perhaps humanity’s greatest collaborative achievement, we’ve created a global communications network that lets these devices communicate with each other instantaneously and, on top of this network, lets us communicate with one another. By linking nearly half of the world’s population, the Internet has amplified and extended the human voice.

Decades of achievement in this regard have been built on top of centuries of scientific research to bring us to this contemporary technological epoch. Nonetheless, the programming of the machines that drive today's world has remained, for the most part, fundamentally unchanged from the rule-based heuristic approaches of writing hand-authored software from the years of punch cards and room-sized computers. Until recently.

Machine Learning is a type of AI focused specifically on developing algorithms and systems that allow machines to learn rather than being explicitly told how to function via a set of programmed instructions. While the field has been around since the mid-1960s, its true potential is only now beginning to be fully exploited. In the past three years specifically, we’ve seen a series of scientific breakthroughs in the use of Deep Learning, a branch of Machine Learning that uses multiple stacked layers of processing units, often called artificial neural networks (ANNs), to form increasingly abstract representations of information and understanding. These neural networks are now solving problems that were expected to be decades away, if solvable at all.
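
To make "multiple stacked layers of processing units" a little more concrete, here is a minimal sketch in plain Python. It is not taken from any particular library or research paper: the layer sizes, the `tanh` nonlinearity, and the helper names (`dense_layer`, `random_layer`, `forward`) are all assumptions chosen for illustration, and the weights are random rather than learned. It only shows how each layer consumes the previous layer's output, which is where the "increasingly abstract representations" come from once the weights have been trained.

```python
import math
import random

def dense_layer(inputs, weights, biases):
    """One layer: each unit takes a weighted sum of the inputs, then applies tanh."""
    return [
        math.tanh(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]

def random_layer(n_inputs, n_units, rng):
    """Hypothetical helper: random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_units)]
    biases = [0.0] * n_units
    return weights, biases

def forward(inputs, layers):
    """Feed the input through each layer in turn, keeping every intermediate output."""
    activations = [inputs]
    for weights, biases in layers:
        activations.append(dense_layer(activations[-1], weights, biases))
    return activations

rng = random.Random(0)
layer_sizes = [8, 6, 4, 2]  # input width, followed by three stacked layers (assumed sizes)
layers = [
    random_layer(n_in, n_out, rng)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

raw_input = [rng.uniform(-1, 1) for _ in range(layer_sizes[0])]
activations = forward(raw_input, layers)
for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

In a real Deep Learning system the weights would be adjusted during training so that the deeper layers respond to progressively higher-level features of the input.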

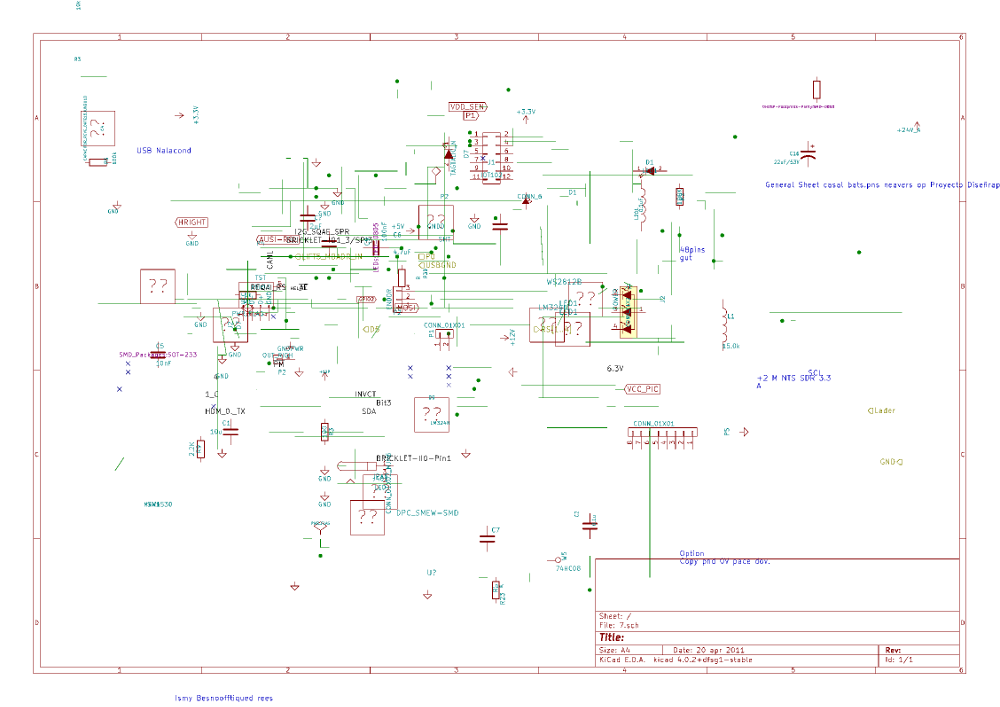

What makes this rise in Deep Learning so exciting is that it represents a shift away from rule-based programming, a tedious process whereby human programmers provide explicit instructions to be carried out by a machine, and towards a sort of general-purpose algorithm: the neural network. Let that sink in for a second… Traditionally, if a programmer wanted to implement a face detection algorithm for automatic shutter release built into the proprietary firmware of a digital camera, they would have to spend months researching state-of-the-art computer vision and object detection algorithms, or at least consult a specialist in the field. The programmer would then implement in code the formulas they had learned through that process. If that same programmer were then asked to implement speech recognition for that same camera so that images could be captured via voice commands, they would need to repeat the tedious process of acquiring an adequate understanding of proven algorithms in the domains of audio processing and computational linguistics. With Machine Learning, given enough quality example data, the same neural network algorithm, or at least a suite of similar network models, can be trained to solve both face detection and speech recognition problems. Moreover, the programmer need not know anything about applied research in either domain so long as they can train the neural network on enough photos with tagged faces and examples of people saying the desired voice command.
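
The idea that one learning routine can serve two unrelated problems can be sketched in a few lines of Python. Everything here is a deliberately tiny stand-in: the "network" is a single sigmoid unit rather than a deep model, the two "tasks" are made-up rules over four random numbers rather than real photos or audio, and the names `train`, `accuracy`, and `make_dataset` are hypothetical. What the sketch does show is that the training code never changes between tasks; only the labeled examples do.

```python
import math
import random

def predict_probability(features, weights, bias):
    """Output of a single sigmoid unit (clamped to avoid overflow in exp)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))

def train(examples, epochs=100, learning_rate=0.5):
    """Fit the unit to (features, label) pairs with stochastic gradient descent."""
    n_features = len(examples[0][0])
    weights, bias = [0.0] * n_features, 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict_probability(features, weights, bias) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

def accuracy(model, examples):
    """Fraction of examples whose thresholded prediction matches the label."""
    weights, bias = model
    correct = sum(
        (predict_probability(features, weights, bias) > 0.5) == bool(label)
        for features, label in examples
    )
    return correct / len(examples)

def make_dataset(rule, n, rng):
    """Hypothetical toy data: four random numbers, labeled by a hidden rule."""
    data = []
    for _ in range(n):
        features = [rng.random() for _ in range(4)]
        data.append((features, 1 if rule(features) else 0))
    return data

# Two unrelated toy labeling rules standing in for two different problems.
tasks = {
    "toy 'face' task": lambda f: f[0] + f[1] > f[2] + f[3],
    "toy 'voice' task": lambda f: f[3] > 0.5,
}

rng = random.Random(42)
results = {}
for name, rule in tasks.items():
    model = train(make_dataset(rule, 200, rng))                 # same routine each time
    results[name] = accuracy(model, make_dataset(rule, 200, rng))  # fresh held-out examples
    print(f"{name}: held-out accuracy {results[name]:.2f}")
```

The programmer in this sketch never writes down either rule for the model; the rules exist only to label the examples, much as tagged photos or recorded voice commands would in the camera scenario.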

Through recent advancements in Deep Learning, machines are beginning to see, hear, and understand the world around them in ways and volumes that humans simply can’t. Convolutional Neural Networks (CNNs) have seen widespread use in batch processing the billions of images that are uploaded to social networks, extracting information that allows those networks to identify people, contextualize their surroundings, and build up a profile of the people who use their services. Self-driving cars use video processed through CNNs to make driving decisions in real time, directly affecting the lives of their passengers as well as others on the road.

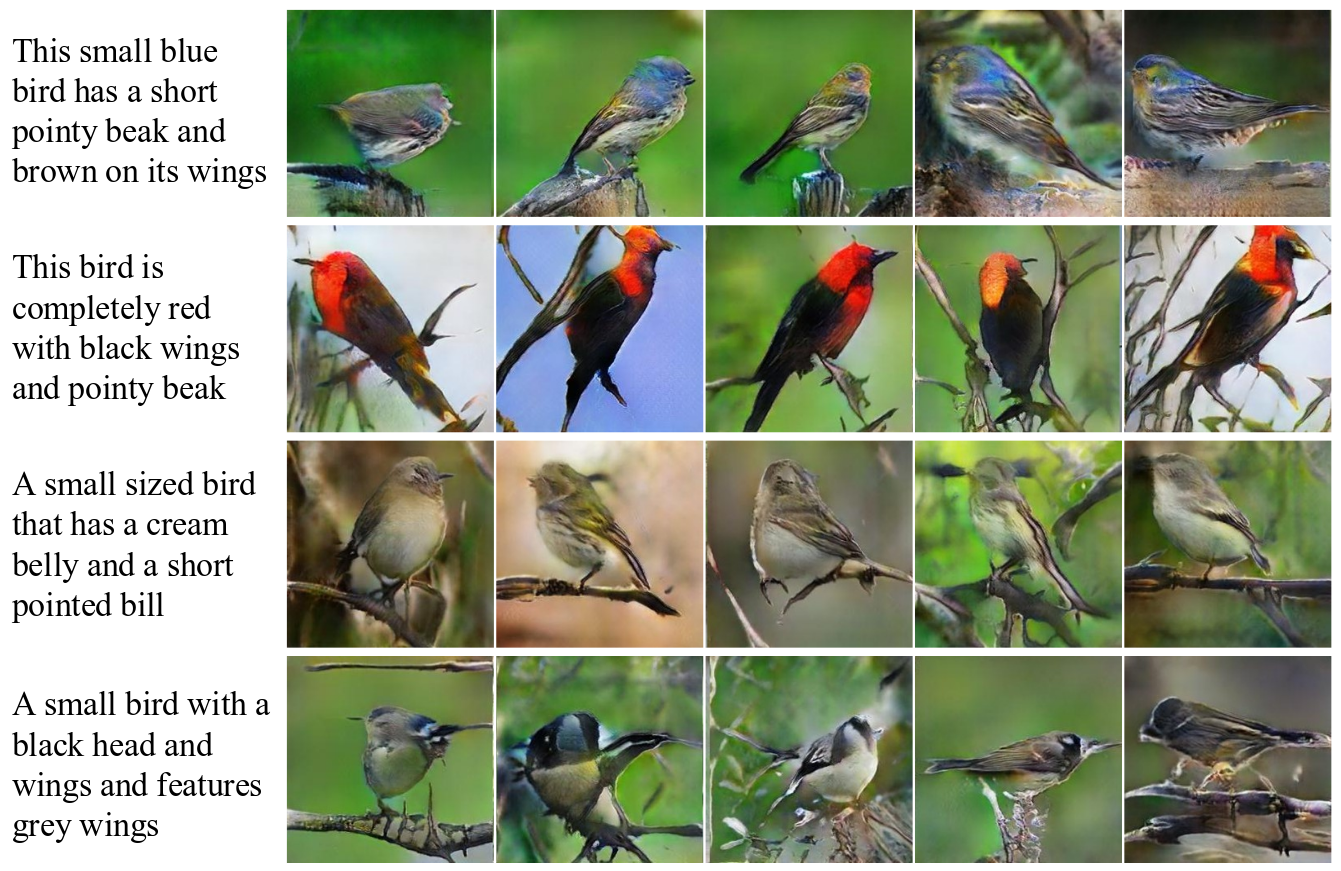

Researchers have applied CNNs along with Reinforcement Learning (RL) to create a general-purpose video game Artificial Intelligence model that performs at a level comparable to human players across a set of 49 Atari games using only screen pixels as input to the network, a feat that has been heralded by many as a great step towards Artificial General Intelligence (AGI), the holy grail of strong AI. Recent implementations of Recurrent Neural Networks (RNNs), particularly those using Long Short-Term Memory (LSTM) processing units as "neurons", are becoming increasingly able to synthesize sequential patterns like text, speech, and even music. Towards this end, perhaps one of the most promising advancements in Machine Learning over the past few years is the Generative Adversarial Network (GAN). GANs are sophisticated generative models that are able to generate strikingly realistic synthesized images of objects, people, and places, among other things. Research into GANs is very new and the results are already overwhelmingly promising.

Have some thoughts to share? Join the public conversation about this post on Twitter, or send us an email at us@brangerbriz.com. We'd love to hear what you think!